For the last few years, the biggest question in recruitment technology was, "How fast can it hire?"

HR leaders wanted tools that could parse resumes instantly, schedule interviews automatically, and filter candidates while they slept.

But in 2026, the conversation has shifted. The most important question is no longer just about speed. It is about safety.

Recent industry discussions have highlighted a growing concern around "Black Box" AI tools—systems that rank or reject candidates using invisible criteria. Regulatory bodies across the world, from the US to India, are asking tough questions about how these algorithms make decisions.

For Talent Acquisition leaders, this is a moment to pause and evaluate. Are the tools in your stack empowering your recruiters, or are they quietly exposing your organization to compliance risks?

This guide breaks down the new rules of engagement for hiring technology. We will explore the "Black Box" risk, the key regulations you need to know (FCRA, GDPR, DPDP), and the simple guardrails you can put in place to hire with confidence.

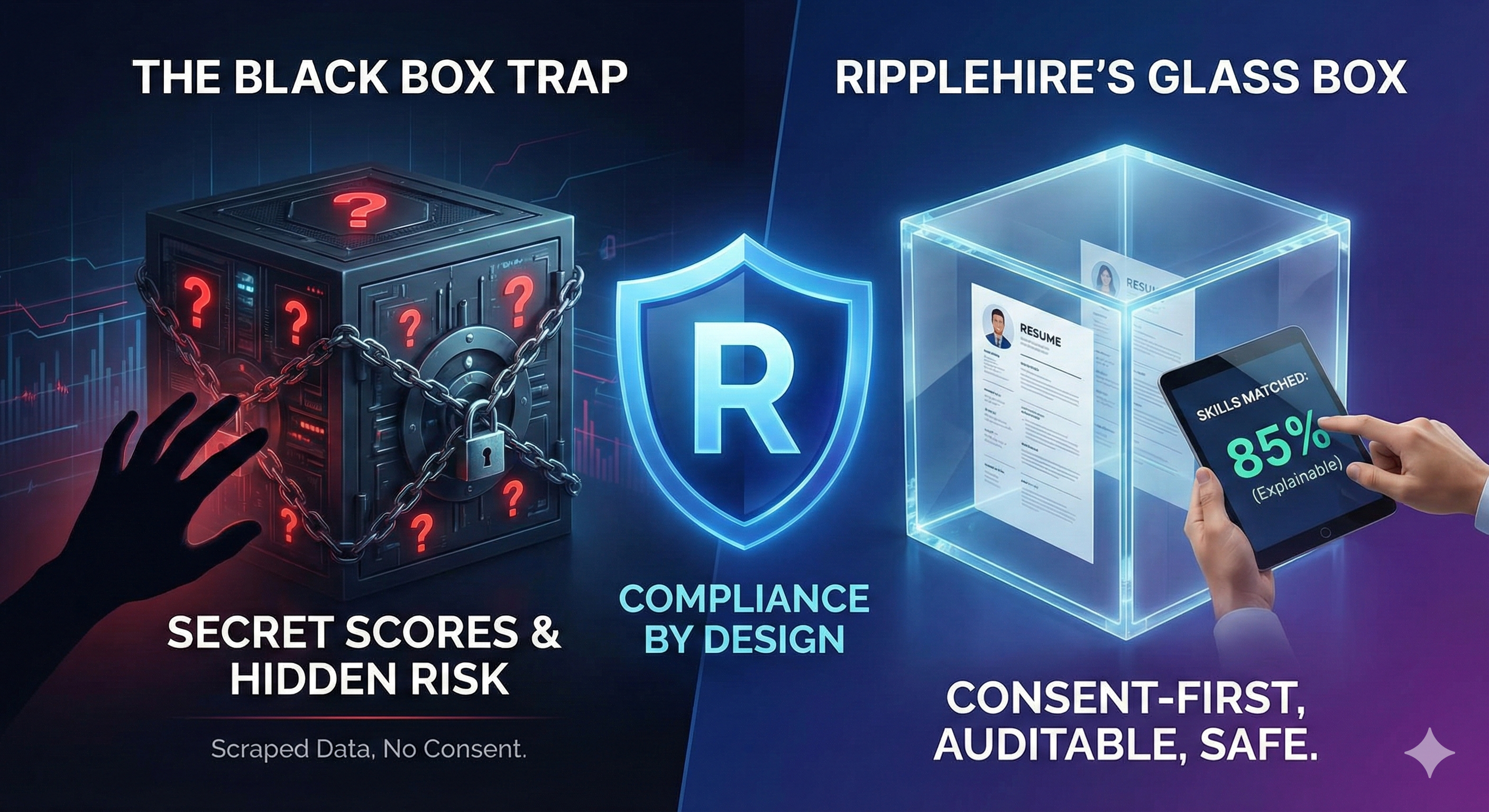

Understanding the "Black Box" Risk

To understand the risk, we first need to understand the difference between an "Assistant" and a "Gatekeeper."

An Assistant helps a recruiter work faster. It might organize resumes, highlight skills, or send emails. The recruiter is still the pilot; the AI is the co-pilot.

A Gatekeeper (or "Black Box" AI) takes over the decision-making process. It might look at a candidate’s profile and assign a hidden "fit score" or a personality label like "low potential." If the score is too low, the candidate might be rejected automatically without a human ever seeing their application.

The risk here is a lack of transparency. If a candidate is rejected by a machine based on data they didn't provide (like scraped social media activity) or criteria they can't see, it creates a "trust gap." In many jurisdictions, it also creates a legal gap.

Why This Matters Now

Three major legal frameworks are shaping how we use data in 2026. You don't need to be a lawyer to understand the basics:

- FCRA (Fair Credit Reporting Act) – The "Right to Know"
  - The Fact: While this is a US law, its principles are becoming a global standard. It generally states that if a third-party report (like an automated background score) is used to make an employment decision, the candidate has a right to know and a right to dispute it.
  - The Takeaway: If your software acts like a reporting agency by "scoring" people behind the scenes, you need to ensure you are notifying candidates properly.
- GDPR (General Data Protection Regulation) – The "Human Rule"
  - The Fact: Under GDPR (Article 22), individuals generally have the right not to be subject to a decision based solely on automated processing when that decision has legal or similarly significant effects on them.
  - The Takeaway: Fully automated rejections are a high-risk activity. There should almost always be a human review step.
- DPDP Act (Digital Personal Data Protection Act) – The "Purpose Rule"
  - The Fact: In India, this act mandates that data can only be used for the specific purpose the user consented to.
  - The Takeaway: If a candidate submits a resume for a specific job, that data cannot be used to train a global AI model or build a "shadow profile" without their clear, specific consent.

The "Red Flag" Checklist: How to Evaluate Your Tools

When you are speaking to a software vendor or auditing your current ATS, simple questions can reveal a lot about their safety philosophy.

Check 1: The "Human-in-the-Loop" Test

Ask: "Does this system automatically reject candidates based on a score?"

- Red Flag: "Yes, it auto-filters the bottom 50% to save you time."
- Green Flag: "No. The AI highlights relevant skills and matches, but a human recruiter must always click 'Reject' or 'Advance'."

Why: Keeping a human in the loop is one of the most effective ways to support fairness and compliance with Equal Employment Opportunity (EEO) guidelines.

Check 2: The Data Source Test

Ask: "Where does the data for candidate profiles come from?"

- Red Flag: "We enrich profiles using data scraped from the open web and social media."
- Green Flag: "We only evaluate the data the candidate explicitly provided to you during the application process."

Why: Data scraped from the web ("Third-Party Data") is often inaccurate. Evaluating candidates based only on what they gave you ("First-Party Data") supports both consent and accuracy.

Check 3: The Explainability Test

Ask: "If a candidate asks why they weren't selected, can the system tell me?"

- Red Flag: "It’s a complex algorithm, so we can't point to one specific reason."
- Green Flag: "Yes. We show you exactly which skills were missing or which criteria didn't match."

Why: You need Explainable AI. If you can't explain a hiring decision, you can't defend it.

The "Glass Box" Approach: Hiring Without Fear

At RippleHire, we believe that the best technology is transparent. We don't build "Black Boxes"; we build "Glass Boxes."

We operate strictly as a Data Processor. You (the employer) are the Data Controller. This means you set the rules, you own the data, and you make the decisions. Our job is to make your process efficient, auditable, and safe.

Here are the four guardrails we use to protect our customers:

1. Consent-First Architecture

We respect the "Purpose Limitation" principle of the DPDP Act.

- How it works: We do not scrape the internet to build secret dossiers on candidates. RippleHire evaluates applicants only based on the information they provide to you.
- The Benefit: You have a clean, compliant paper trail. Every data point used in the hiring decision has the candidate's consent attached to it.
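To make the purpose-limitation idea concrete, here is a minimal sketch of how consent could travel with each data point. It is an illustration only, not RippleHire's implementation: the `ConsentRecord` class, the `fields_allowed_for` helper, and the purpose labels are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical record: one candidate data field and the purposes consented to."""
    field_name: str
    purposes: FrozenSet[str]


def fields_allowed_for(records: Iterable[ConsentRecord], purpose: str) -> List[str]:
    """Return only the fields whose recorded consent covers the requested purpose."""
    return [r.field_name for r in records if purpose in r.purposes]


# Example data: a resume submitted for one requisition (purpose labels are made up).
records = [
    ConsentRecord("resume_text", frozenset({"job_application:REQ-101"})),
    ConsentRecord("email", frozenset({"job_application:REQ-101", "talent_pool"})),
]

# Screening for the job the candidate applied to may use both fields.
print(fields_allowed_for(records, "job_application:REQ-101"))

# A purpose the candidate never agreed to gets no data at all.
print(fields_allowed_for(records, "model_training"))
```

The design choice worth noting is that the check defaults to "no": a purpose that was never recorded simply returns nothing, so a new use of the data requires fresh consent rather than a code change.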

2. Transparent Insights (Not Secret Scores)

We believe in showing our work.

- How it works: Instead of a mysterious "Fit Score," we provide clear Skill Matches. Our system highlights exactly which keywords, certifications, or experiences on the resume matched your job description.
- The Benefit: Your recruiters can validate the AI's logic instantly. It builds trust instead of confusion.
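As a rough illustration of the difference between a skill match and an opaque score, the sketch below returns the matched and missing skills themselves instead of a number. It assumes skills have already been extracted as plain strings; `SkillMatchReport` and `explain_skill_match` are hypothetical names, and real matching would likely need more than case-insensitive comparison.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class SkillMatchReport:
    """Hypothetical explainable result: lists a recruiter can read, not a score."""
    matched: List[str]
    missing: List[str]


def explain_skill_match(required_skills: Iterable[str],
                        candidate_skills: Iterable[str]) -> SkillMatchReport:
    """Compare required skills with candidate skills, ignoring case and padding."""
    candidate = {s.strip().lower() for s in candidate_skills}
    matched: List[str] = []
    missing: List[str] = []
    for skill in required_skills:
        if skill.strip().lower() in candidate:
            matched.append(skill)
        else:
            missing.append(skill)
    return SkillMatchReport(matched=matched, missing=missing)


# Example: required skills from a job description vs. skills found on a resume.
report = explain_skill_match(["Python", "SQL", "Kubernetes"],
                             ["python", "sql ", "Docker"])
print("Matched:", report.matched)
print("Missing:", report.missing)
```

Because the output names each criterion, a recruiter can check it against the resume, and the same lists can answer a candidate who asks why they were not selected.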

3. The "Human-in-the-Loop" Guarantee

We design for recruiter enablement, not replacement.

- How it works: Our automation handles the repetitive tasks: parsing resumes, scheduling interviews, and verifying documents. But the critical decisions (Shortlisting and Offering) are always triggered by a human user.
- The Benefit: This ensures that every hiring decision is accountable and human-centric.
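One way to picture a human-in-the-loop guardrail is a decision function that refuses to run without a named reviewer. The sketch below is a simplified assumption of how such a gate might look, not a description of RippleHire's product; `record_decision`, `Decision`, and `HumanReviewRequired` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

VALID_ACTIONS = {"advance", "reject"}


class HumanReviewRequired(Exception):
    """Raised when a decision is attempted without a named human reviewer."""


@dataclass(frozen=True)
class Decision:
    """Hypothetical audit record for one hiring decision."""
    candidate_id: str
    action: str
    decided_by: str
    ai_suggestion: Optional[str] = None  # kept for the audit trail only


def record_decision(candidate_id: str, action: str, reviewer_id: Optional[str],
                    ai_suggestion: Optional[str] = None) -> Decision:
    """Record a decision only when a human reviewer is attached to it."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    if not reviewer_id:
        # The AI suggestion alone is never enough to advance or reject.
        raise HumanReviewRequired(
            f"candidate {candidate_id}: a recruiter must confirm '{action}'"
        )
    return Decision(candidate_id, action, reviewer_id, ai_suggestion)


# A recruiter confirms the suggestion, so the decision is recorded.
print(record_decision("cand-1", "advance", "recruiter-42", ai_suggestion="advance"))

# An automated rejection with no reviewer is blocked.
try:
    record_decision("cand-2", "reject", None, ai_suggestion="reject")
except HumanReviewRequired as err:
    print("Blocked:", err)
```

Storing the AI suggestion next to the reviewer's identity also gives auditors a record of who made each call and whether they agreed with the automation.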

4. Enterprise-Grade Security

Safety isn't just about AI; it's about data security.

- SOC 2 Type 2 & ISO 27001 Certified: These certifications prove that an independent auditor has verified our security controls over time.
- GDPR & DPDP Ready: We have built-in features to handle "Right to be Forgotten" requests and data portability, ensuring you stay compliant with global privacy laws.

Trust is Your Best Asset

The hiring landscape is maturing. The "Wild West" days of unchecked AI are ending, and a new era of Responsible AI is beginning.

This is a positive shift. It means that organizations are prioritizing trust—trust with their candidates, trust with their data, and trust with their technology partners.

You don't have to choose between efficiency and compliance.

By asking the right questions and choosing partners who prioritize transparency, you can build a hiring engine that is fast, fair, and future-proof.

Frequently Asked Questions (FAQs)

1. What is a "Black Box" AI in recruitment?

"Black Box" AI refers to automated systems where the internal decision-making logic is hidden. These tools often assign rankings or scores to candidates without explaining how the score was calculated, making it difficult for recruiters to justify decisions or for candidates to understand why they were rejected.

2. Why is "Human-in-the-Loop" important for compliance?

"Human-in-the-Loop" means that a human recruiter reviews the AI's suggestions before a final decision (like a rejection) is made. This is critical for compliance with GDPR and with EEO guidelines, which caution against fully automated decision-making that significantly affects a person's livelihood.

3. Does RippleHire scrape data from social media?

No. RippleHire follows a "Consent-First" architecture. We process only the data that candidates explicitly submit to your career portal or data you already legally hold. We do not scrape external websites to build shadow profiles.

4. What is the difference between an "Assistant" and a "Gatekeeper" AI?

An Assistant AI (like RippleHire) helps recruiters by organizing data, highlighting skills, and automating admin tasks, but leaves the final decision to the human. A Gatekeeper AI autonomously filters and rejects candidates based on its own criteria, acting as the decision-maker.

5. How does the DPDP Act affect my hiring process in India?

The DPDP Act requires that you obtain specific consent from candidates for the data you collect and use it only for that specific purpose. You cannot use candidate data for unrelated purposes (like training third-party models) without fresh consent. RippleHire helps manage these consent flows automatically.

6. What certifications should I look for to ensure an ATS is safe?

At a minimum, look for ISO 27001 (Information Security) and SOC 2 Type 2 (Operational Security). These certifications indicate that the vendor has rigorous, audited controls in place to protect your data.

7. Can AI help with diversity without introducing bias?

Yes, if it is "Explainable AI." When AI focuses strictly on objective skills and experience (rather than names, locations, or pedigree) and shows its logic, it can help recruiters make more consistent, less biased decisions. "Black Box" models, however, can hide and perpetuate historical biases.

8. What is a "Data Processor" vs. a "Data Controller"?

In the context of hiring software: You (the Employer) are the Controller who decides why and how to hire. RippleHire is the Processor that handles the data on your behalf. This distinction is important because it means we do not "own" your candidate data or use it for our own benefit; we simply process it according to your instructions.